The Perfect AI Prompt Writing System for ChatGPT & Claude

Lessons from Building a 700+ Prompt AI Writing System.

Written by special guest contributor, Tim Hanson → CCO at Penfriend.ai

I’ve built over 1,500 prompts and prompt sequences over the last 2 years.

I say built, because I have a system to make prompts that work.

In my 3,000 hours writing prompts → I’ve seen patterns in what works and what doesn’t…

What gets great results consistently, and what falls flat on it’s face.

And most importantly, what is the difference between great prompts that do the work for you, versus poor prompts that you waste time creating and never use again.

So for you, fellow Autopreneurs…I give you my entire system.

Use it to build any prompt you want.

Why Most Prompts Fail

The biggest problem with most prompts isn't that they're "bad" - it's that they're incomplete.

People throw a vague request at an LLM and hope for the best. It's like walking into a restaurant and saying "food" instead of ordering from the menu.

The Seven Essential Components

Every prompt needs at least three of these seven components to work reliably:

Role

Task

Steps

Context/Constraints

Goal

Formatting

Output

Let's go scuba mode on each one.

At the end, we’ll put it all together into 1 prompting template you can use as a guide…

1. Role: Who's Doing the Work?

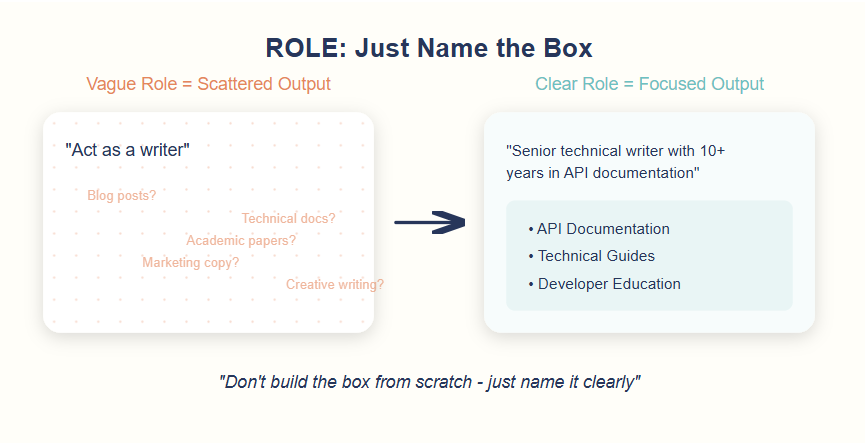

Most people know about the ROLE. It’s a common input. You’re essentially defining the box in which the LLM can play in. If you’re vague, you get vague answer. If you’re specific, you get specific answer. Really isn’t much more to it than this.

Bad role: "Act as a writer"

Good role: "Senior technical writer with 10+ years experience in API documentation and developer education"

The difference? The second one gives the LLM specific expertise domains to draw from.

My favourite way to write this is like so:

Adopt the role of X with elements of role Y

A lot of the time when we prompt we’re actually asking the LLM to take into account multiple points of view, or at least one primary role with a couple dashes of other ideas.

“Adopt the role of _____” seems to keep the creativity alive over a prompt like, “You are a ___” which is more rigid and exacting.

It removes a lot of thing thinking and reasoning side of the LLM.

You can, should you wish, go more complex than this on what the role is. BUT, I’ve found it really isn’t necessary.

Give the box a name, don’t build the box from scratch.

2. Task: What Needs to Be Done?

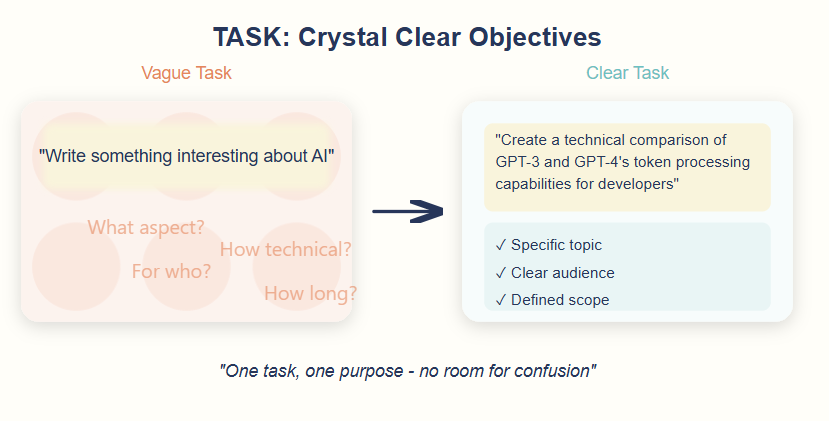

Your Task section needs to be crystal clear about the objective.

One task, one purpose.

Poorly written task: "Write something interesting about AI"

Clearly written task: "Create a technical comparison of GPT-3 and GPT-4's token processing capabilities, focusing on practical implications for developers"

See how the second one leaves no room for confusion?

3. Steps: The Road Map

Steps break down how to accomplish the task. This is optional but powerful when you need specific methodology followed.

Example:

## STEPS

1. Define key technical terms

2. Compare processing speeds

3. Analyze token handling differences

4. Provide practical implementation tips

5. Summarize key takeawaysYou can get by without doing this.

The steps section is for when you know how to do a task and need specific things doing every time.

The best way to work out these steps is to do the task yourself. Screen record yourself and talk through the steps. Then throw them into a new prompt asking it to summarise the transcript into steps for you.

Riverside fm has an amazing transcription service and it’s completely free. Highly recommend.

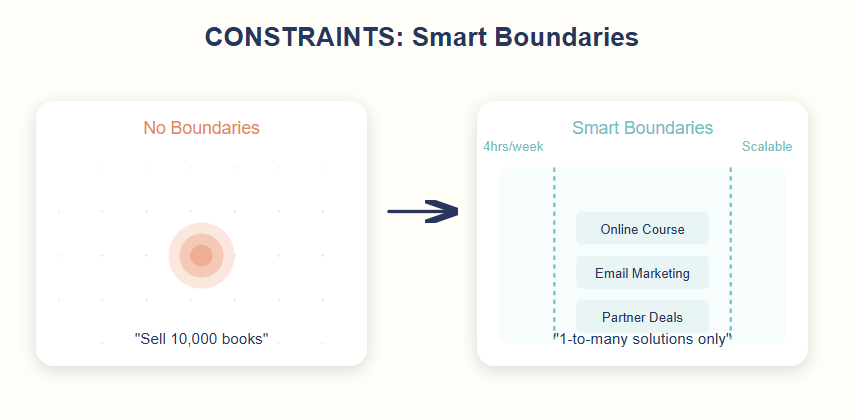

4. Context/Constraints: Setting the Boundaries

Context and constraints tell the LLM what it's working with and what limits it needs to respect.

Example:

## CONTEXT

- Article targets senior developers

- Follows section on basic NLP concepts

- Requires specific code examples

## CONSTRAINTS

- No basic explanations of what tokens are

- Code examples must be in Python

- Keep technical accuracy over accessibilityThe boundaries are the walls to your box. They are the things that make the task tangible.

Think about it like this, if I wanted to come up with a business plan to sell 10,000 books this year I could have 10,000 one to one sales calls about my book. But that sounds like a shit way to spend the year.

So my constraint could be “Without spending more than 4 hours a week promoting it”, or “Only suggest scalable 1 to many solutions.”

5. Goal: The End Game

Your Goal section defines what success looks like.

What should this prompt achieve?

Probably the most optional of the sections, but I’ve found having bit here like how do you want it to read, how should it flow, how should it make the reader feel etc have the biggest impact in this seciton.

Example:

## GOAL

Create a technical comparison that enables developers to make informed decisions about which model to use for their specific use case, with concrete examples and metrics.6. Formatting: The Structure

Formatting isn't about making things pretty - it's about ensuring consistent structure. This is crucial if you're feeding the output into another process (or prompt).

Example:

## FORMAT

- Use H2 (##) for main sections

- Code in triple backticks with language specified

- Key terms in bold

- Metrics in tables

- Examples in bullet pointsIf you’re using the prompt over and over again, this is the second most important step. You want to copy it into a sheet? Then specify that. If you want it to have bullets, or be broken down in a certain way. Then say it.

Literally give an formatting example to get the same thing over and over again.

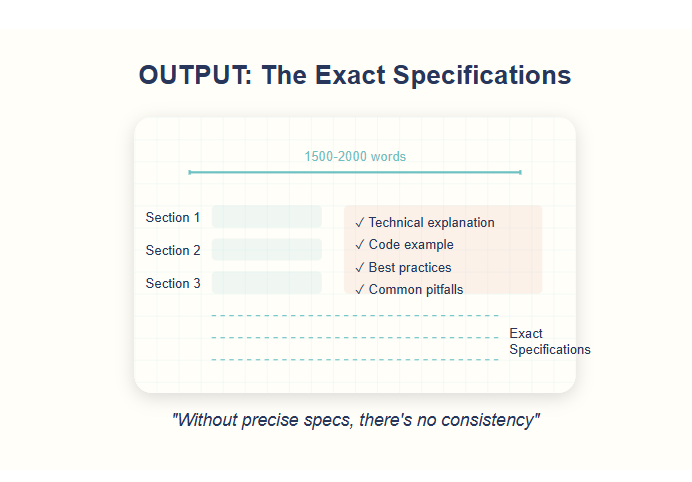

7. Output: The Most Important Part

This is where most prompts fall apart.

Your Output section needs to specify exactly what you want to see.

Without this Output section, the LLM will present it’s answers in whatever way it thinks is best.

Now, don’t get me wrong, there is merit in this, but we’re after something we can use to replace a job. Something consistent.

This is where the consistency comes from → defining exactly what you’re after.

Example: Technical API Documentation

## OUTPUT

Generate API endpoint documentation with:

- Structure:

- Endpoint summary (50-75 words)

- Authentication requirements

- Request format

- Response format

- Error codes

- Rate limits

- Must include for each endpoint:

- HTTP method

- Full URL path

- Required/optional parameters

- Response object structure

- 3 example requests

- 3 example responses (success, error, edge case)

- Code examples in:

- cURL

- Python

- JavaScript

- Format requirements:

- Parameters in table format

- Code in triple backticks with language

- Response schemas in JSON

- Status codes with emoji indicatorsExample: Data Analysis Report

## OUTPUT

Produce a data analysis report with:

- Length: 2000-2500 words

- Required sections:

1. Executive Summary (250 words)

2. Methodology (300 words)

3. Key Findings (1000 words)

4. Limitations (200 words)

5. Recommendations (250 words)

- Each key finding must include:

- Statistical significance

- Confidence interval

- Real-world implication

- Visualization specification

- Technical requirements:

- All numbers rounded to 2 decimal places

- Percentages include sample size

- P-values in scientific notation

- Effect sizes with Cohen's d

- Visualization specs:

- Type of chart for each metric

- Color schemes (primary/secondary)

- Axis labels and units

- Legend placement

- Format:

- Tables for comparative data

- Footnotes for assumptions

- Citations in APA style

- Formulas in LaTeXUse These to Create Your Master Prompt

Here's our complete prompt example, that uses all seven components we discussed:

## ROLE

Senior technical content strategist with expertise in API documentation and developer education

## TASK

Create a comprehensive guide about implementing rate limiting in REST APIs

## STEPS

1. Define rate limiting concepts

2. Compare implementation methods

3. Provide code examples

4. Outline best practices

5. Address common pitfalls

## CONTEXT

- Audience: Backend developers

- Knowledge level: Intermediate to advanced

- Part of larger API design series

## CONSTRAINTS

- Focus on practical implementation

- Use Python and Node.js examples

- No basic REST API explanations

- Keep security considerations primary

## GOAL

Enable developers to implement production-grade rate limiting with clear understanding of tradeoffs and best practices

## FORMAT

- H2 for main sections

- H3 for subsections

- Code in triple backticks

- Tables for comparisons

- Bullet points for lists

## OUTPUT

Deliver technical guide:

- Length: 1500-2000 words

- 5 main sections

- Each section must include:

- Technical explanation

- Code example

- Best practices

- Common pitfalls

- Must include:

- Performance considerations

- Security implications

- Scaling factorsCopy this prompt above. You can create a template from it to use repeatedly when building your own prompts.

Also, bookmark this article whenever you’re stuck and need help.

The Secret to Scaling

When your output format is precisely defined, you can feed it into other prompts or processes reliably.

Inside our operation at Penfriend.ai, we even use prompts to reformat the output from another prompt, so it works better as the input for our next task in the sequence.

Think of this process like a production line: each station needs to receive materials in a specific format to do its job properly.

Advanced Applications: A Peek Behind the Curtain

At Penfriend.ai, we take this basic structure and build entire systems with it.

We have prompts that generate other prompts, prompts that reformat outputs for the next prompt in the chain, and prompts that handle edge cases.

Here’s the best part: every single one of our specialized prompts still follows this same seven-component structure.

Even our error-handling agents (which catch and fix issues in the output) use these same building blocks. The complexity comes from how we chain things together, not from making the prompts themselves more complex.

If anything we break the prompts down even further, at times going as far to have a different prompt for each step in the “steps” sequence…so we can get crazy granular on exactly what each prompt needs to be doing.

Testing Your Prompts

Want to know if your prompt is solid?

Run it multiple times.

The output should be consistently good. If it's not, look at which of the seven components might need more definition.

The most common issue? Vague output specifications.

If you're not getting consistent results, start by making your Output section more detailed.

Prompt Snippets

There are a bunch of things you can add to prompts to make them work better.

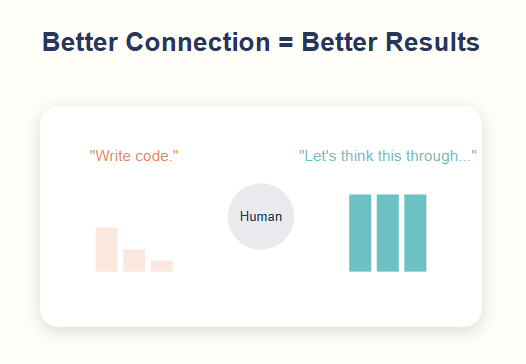

The more you talk to the prompt like a human, the better output you get. Even going as far as asking how good it is at the job you’re asking it to do, and praising it for operating on all cylinders today.

The opposite is also true.

If you have things you don’t want it to do, telling the prompt you’ll fine it $1,000 every time it does something wrong, should remove that issue entirely.

Get Great Outputs, Consistently

Tell the prompt it’s allowed to think out loud, before committing to your formatting.

Prompting the LLM to tell you how it’s working through its tasks, will give you more defined outputs.

And when it’s done with it’s thinking, the AI conforms to the formatting you had before, still giving you a perfectly usable outcome…but now it’s 10x better than it would be otherwise.

Get Started: Creating Your Own Prompts

Start with three components: Role, Task, and Output

Add others as needed for clarity

Be extremely specific in your Output section

Test multiple times

Refine based on results

Remember: You don't need all seven components every time, but you do need to be specific about the ones you use.

It’s not magic…It’s a system.

Perfect outputs aren't magic - they're engineered .

Whether you're writing a single prompt or building a system of hundreds, these seven components give you a basic structure for reliable outputs.

Start small, be specific, and always define your outputs clearly.

The rest will follow.

Now go build something amazing.

✌️Tim

CCO, Penfriend.ai

Hey Autopreneur!

It’s Ryan here…

Just wanted to give a quick shoutout to our newset community members who joined The Automation Lab:

Sabrina Ahmed - Burnout Coach & Neuroscientist.

Jonnie Perry - Ghostwriter for Agency Owners.

Sivakuma Margabandhu - Aspiring IT Coach.

Artia Hawkins - Copywriter for Entrepreneurs.

Michele Parad - Spiritual Business Consultant.

Max Bernstein - Marketing & Positioning Expert.

Matt Rubenstein - ECommerce Strategist & Consultant.

Pushvinder Gill - Retired Telecom Director.

Jessica Encell Coleman - Human Connection Expert.

Dana Daskalova - LinkedIn Coach.

Jay Melone - Growth & Scalability Strategist.

Chris Mielke - AI Empowered Project Manager.

Elliot Meade - Human Fulfillment & Lifestyle Design Coach.

These entrepreneurs all wanted more for themselves in 2025…and took the first step by joining our growing community. And they’re getting results:

Living proof that anyone, across any industry, can upskill in AI & Automation.

Join us today and get instant access to my premier AI Systems, Workflows, and Business Strategies to grow your business online.

This exactly where 1 guide is enough to master a topic comprehensibly.

Thanks for sharing Ryan!

This is such an awesome lesson in prompt writing. I need to spend a few hours digesting this as there is so much good information. Using COSTAR and AUTOMATE have been leaps forward but I’ve never read an explanation for a framework as detailed as this.